DP-201 Exam Question 81

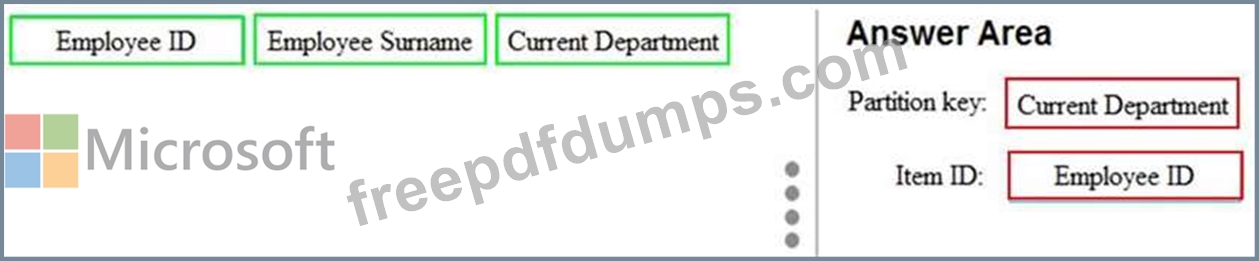

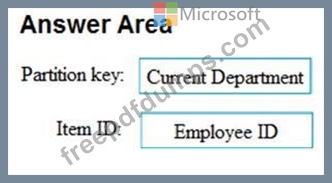

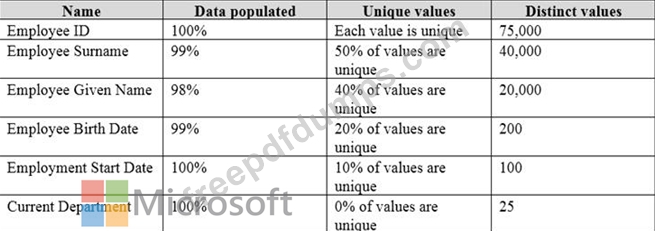

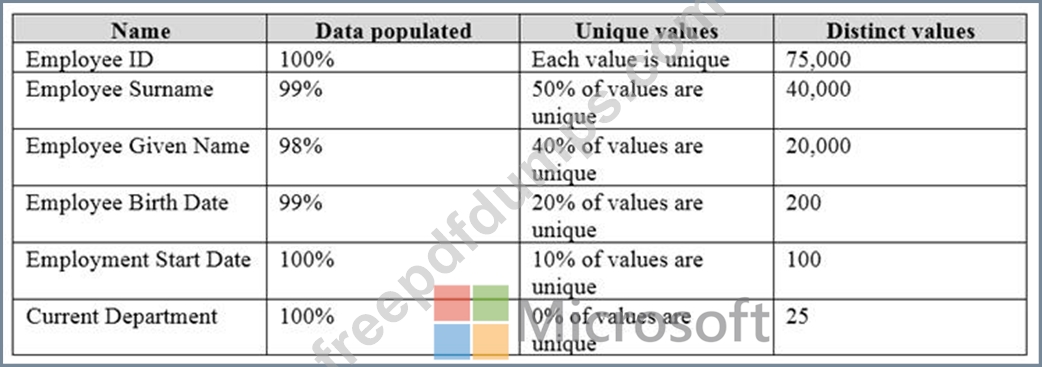

You have data on the 75,000 employees of your company. The data contains the properties shown in the following table.

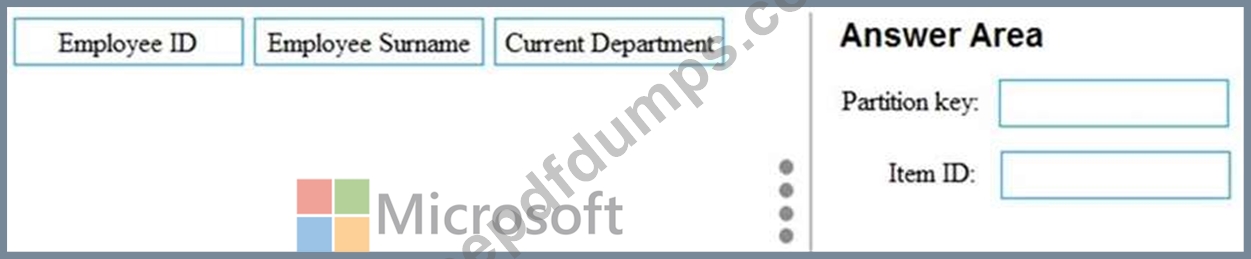

You need to store the employee data in an Azure Cosmos DB container. Most queries on the data will filter by the Current Department and the Employee Surname properties.

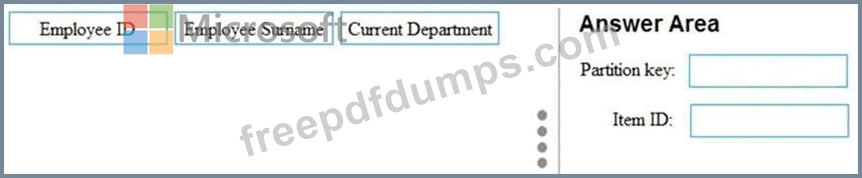

Which partition key and item ID should you use for the container? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-201 Exam Question 82

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have streaming data that is received by Azure Event Hubs and stored in Azure Blob storage. The data contains social media posts that relate to a keyword of Contoso.

You need to count how many times the Contoso keyword and a keyword of Litware appear in the same post every 30 seconds. The data must be available to Microsoft Power BI in near real-time.
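To make the counting requirement concrete, here is a minimal Python sketch of the computation itself, independent of any Azure service. It assumes each post arrives as a hypothetical `(timestamp_seconds, text)` pair, treats a "keyword" as a case-insensitive whole-word match, and groups posts into non-overlapping (tumbling) 30-second windows aligned to multiples of 30 seconds. All of these choices are assumptions for illustration.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Tuple

WINDOW_SECONDS = 30  # length of each tumbling window


def _has_word(text: str, word: str) -> bool:
    # Assumption: a keyword matches as a whole word, ignoring case.
    return re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE) is not None


def count_co_mentions(posts: Iterable[Tuple[float, str]],
                      window: int = WINDOW_SECONDS) -> Dict[int, int]:
    """Return {window_end_seconds: count} of posts mentioning both keywords.

    Windows that contain no matching posts are omitted from the result.
    """
    counts: Counter = Counter()
    for ts, text in posts:
        if _has_word(text, "Contoso") and _has_word(text, "Litware"):
            # Assign the post to the tumbling window that contains its timestamp.
            window_start = int(ts // window) * window
            counts[window_start + window] += 1
    return dict(sorted(counts.items()))
```

For example, posts at 1 s and 12 s that mention both keywords fall into the window ending at 30 s, while a matching post at 31 s falls into the window ending at 60 s.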

Solution: You create an Azure Stream Analytics job that uses an input from Event Hubs to count the posts that have the specified keywords, and then sends the data to an Azure SQL database. You consume the data in Power BI by using DirectQuery mode.

Does the solution meet the goal?

DP-201 Exam Question 83

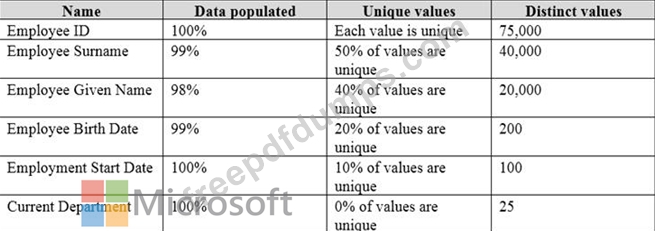

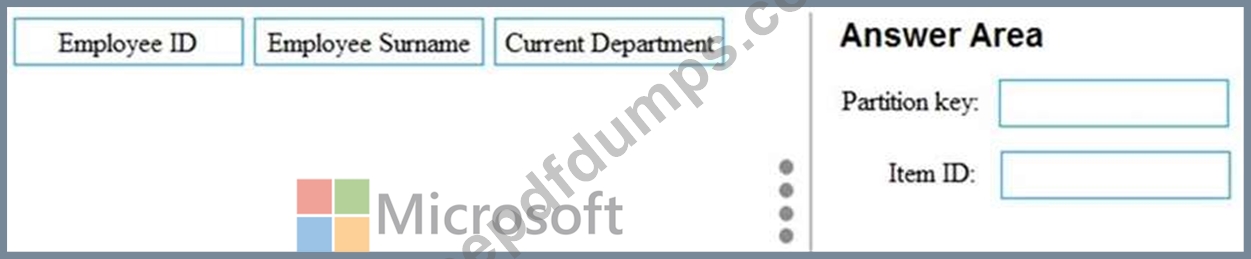

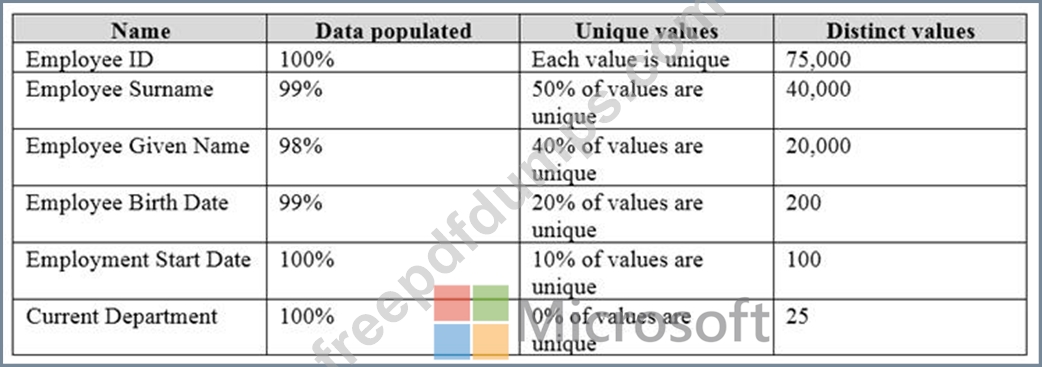

You have data on the 75,000 employees of your company. The data contains the properties shown in the following table.

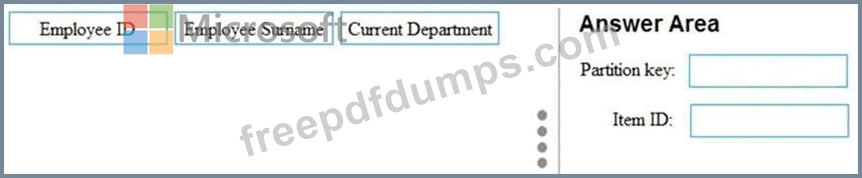

You need to store the employee data in an Azure Cosmos DB container. Most queries on the data will filter by the Current Department and the Employee Surname properties.

Which partition key and item ID should you use for the container? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-201 Exam Question 84

You are planning an Azure solution that will aggregate streaming data.

The input data will be retrieved from tab-separated values (TSV) files in Azure Blob storage.

You need to output the maximum value from a specific column for every two-minute period in near real-time. The output must be written to Blob storage as a Parquet file.
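The aggregation itself can be sketched in Python, independent of which Azure service performs it. This minimal sketch assumes the TSV has a header row, a hypothetical `event_time` column holding epoch seconds, and a hypothetical numeric `reading` column whose maximum is wanted. It computes the maximum per two-minute tumbling window. Writing the result as Parquet is left out so the example stays within the standard library.

```python
import csv
import io
from typing import Dict

WINDOW_SECONDS = 120  # two-minute tumbling window


def max_per_window(tsv_text: str,
                   ts_col: str = "event_time",
                   value_col: str = "reading") -> Dict[int, float]:
    """Return {window_start_seconds: max(value_col)} for each two-minute window.

    Column names are hypothetical placeholders for the real TSV schema.
    """
    result: Dict[int, float] = {}
    # csv.DictReader with a tab delimiter parses TSV rows into dicts keyed by header.
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    for row in reader:
        ts = float(row[ts_col])
        value = float(row[value_col])
        # Assign the row to the tumbling window that contains its timestamp.
        start = int(ts // WINDOW_SECONDS) * WINDOW_SECONDS
        if start not in result or value > result[start]:
            result[start] = value
    return dict(sorted(result.items()))
```

Rows at 0 s, 59 s and 119 s share the window starting at 0 s, and rows at 120 s and 239 s share the window starting at 120 s.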

What should you use?

DP-201 Exam Question 85

A company has a real-time data analysis solution that is hosted on Microsoft Azure. The solution uses Azure Event Hubs to ingest data and an Azure Stream Analytics cloud job to analyze the data. The cloud job is configured to use 120 streaming units (SUs).

You need to optimize performance for the Azure Stream Analytics job.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.