DP-201 Exam Question 11

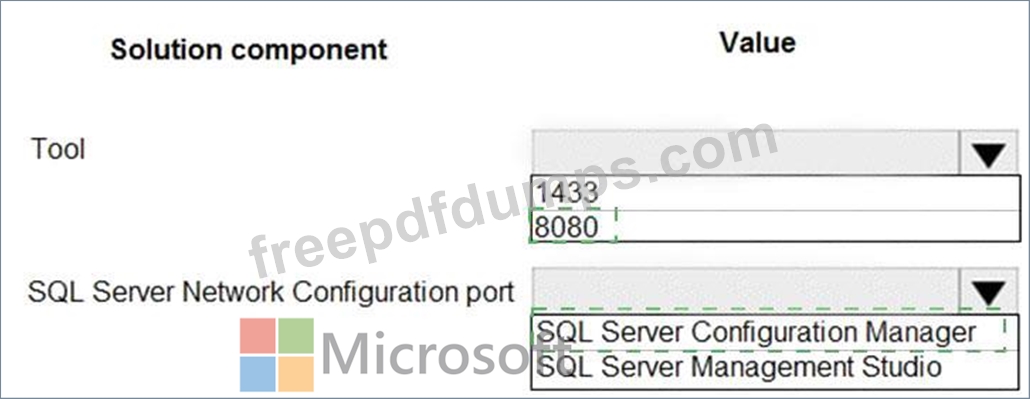

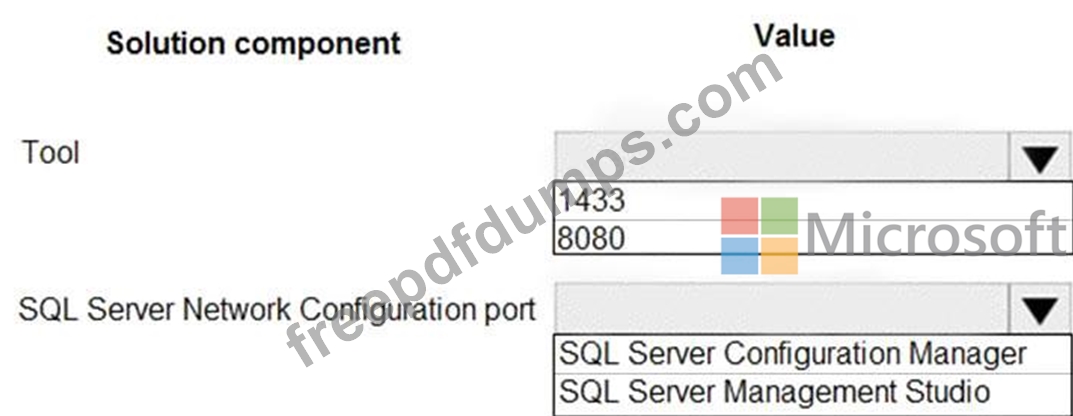

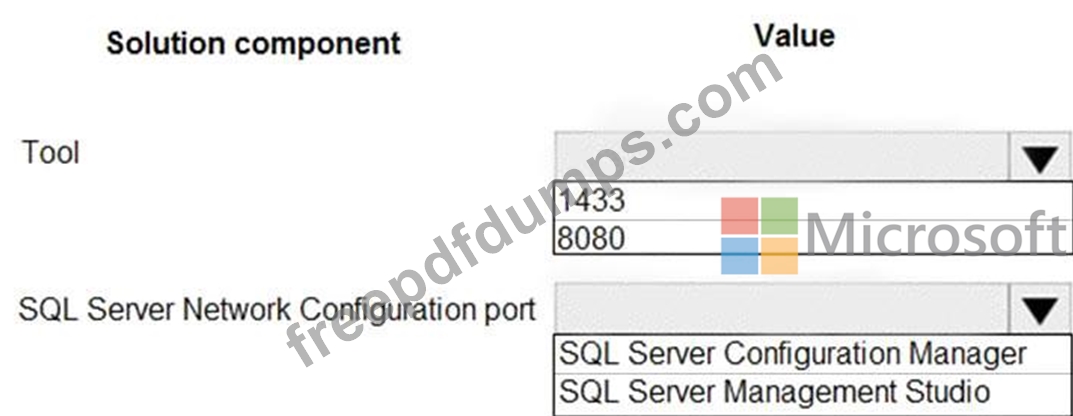

You need to design network access to the SQL Server data.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-201 Exam Question 12

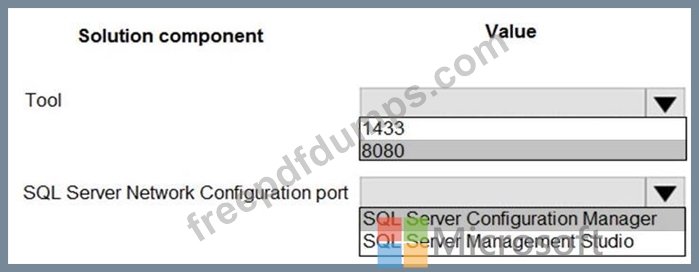

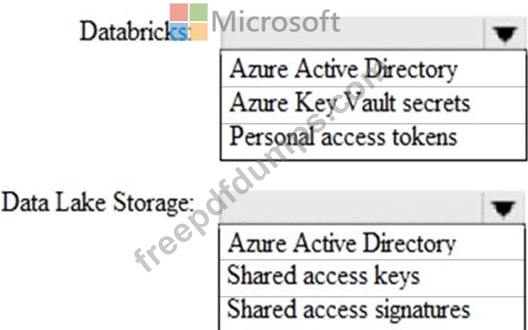

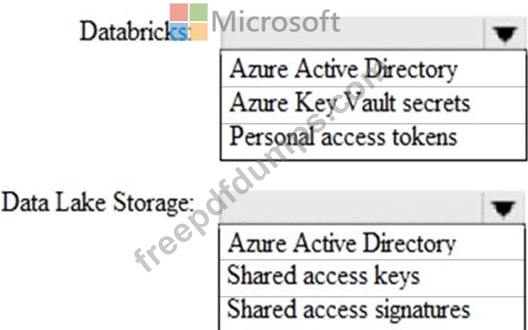

You use Azure Data Lake Storage Gen2 to store data that data scientists and data engineers will query by using Azure Databricks interactive notebooks. The folders in Data Lake Storage will be secured, and users will have access only to the folders that relate to the projects on which they work.

You need to recommend which authentication methods to use for Databricks and Data Lake Storage to provide the users with the appropriate access. The solution must minimize administrative effort and development effort.

Which authentication method should you recommend for each Azure service? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-201 Exam Question 13

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Data Lake Storage account that contains a staging zone.

You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.
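As a rough illustration of the "incremental" part of this requirement, the sketch below selects only the staged files that changed during the 24 hours before a daily run. The question does not say how increments are identified, so the last-modified rule is an assumption, and `StagedFile`, `daily_window`, and `select_incremental` are hypothetical names rather than part of any Azure SDK.

```python
from datetime import datetime, timedelta, timezone
from typing import NamedTuple


class StagedFile(NamedTuple):
    """Hypothetical record for a file in the staging zone."""
    path: str
    last_modified: datetime


def daily_window(run_time: datetime) -> tuple[datetime, datetime]:
    """Return the 24-hour window that ends at run_time."""
    return run_time - timedelta(days=1), run_time


def select_incremental(files, window_start, window_end):
    """Keep files modified in the half-open window (window_start, window_end].

    Excluding the start and including the end means that consecutive
    daily runs never pick up the same file twice.
    """
    return [f for f in files if window_start < f.last_modified <= window_end]


if __name__ == "__main__":
    run_time = datetime(2024, 1, 2, tzinfo=timezone.utc)
    start, end = daily_window(run_time)
    staged = [
        StagedFile("staging/old.csv", datetime(2023, 12, 31, 8, tzinfo=timezone.utc)),
        StagedFile("staging/new.csv", datetime(2024, 1, 1, 8, tzinfo=timezone.utc)),
    ]
    for f in select_incremental(staged, start, end):
        print(f.path)
```

In a real pipeline the window boundaries would more likely come from the scheduler than from code like this; the sketch only shows the selection logic.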

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes an Azure Databricks notebook, and then inserts the data into the data warehouse.

Does this meet the goal?

DP-201 Exam Question 14

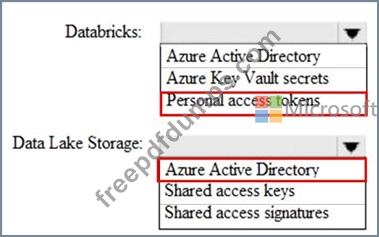

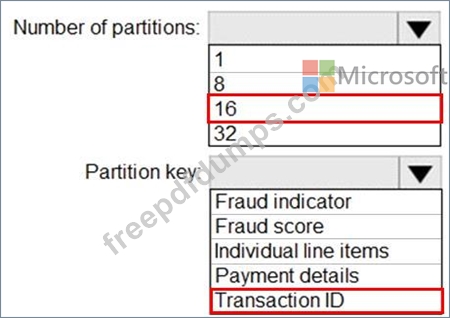

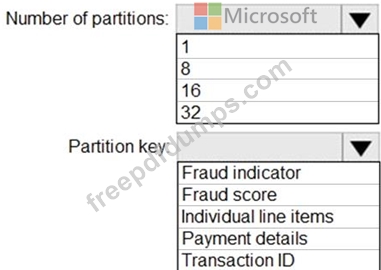

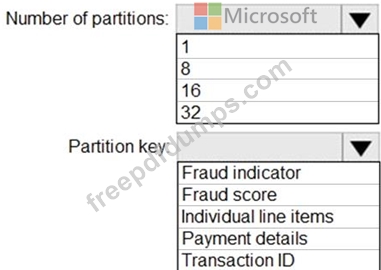

You have an Azure event hub named retailhub that has 16 partitions. Transactions are posted to retailhub. Each transaction includes the transaction ID, the individual line items, and the payment details. The transaction ID is used as the partition key.
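To make the role of the partition key concrete, here is a minimal sketch of how a key can be mapped deterministically onto one of 16 partitions, so that every event carrying the same transaction ID lands in the same partition. The hash used below (SHA-256 modulo the partition count) is an assumption chosen for illustration and is not the hash the service applies internally; `assign_partition` and `group_by_partition` are hypothetical helpers.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 16  # retailhub has 16 partitions


def assign_partition(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index with a stable hash.

    Assumption: SHA-256 modulo the partition count stands in for the
    service's internal hash, which is not reproduced here.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def group_by_partition(events):
    """Group events (dicts with a 'transaction_id' field) by partition index."""
    partitions = defaultdict(list)
    for event in events:
        partitions[assign_partition(event["transaction_id"])].append(event)
    return dict(partitions)


if __name__ == "__main__":
    sample = [
        {"transaction_id": "T-1001", "item": "milk"},
        {"transaction_id": "T-1002", "item": "bread"},
        {"transaction_id": "T-1001", "item": "eggs"},
    ]
    for index, batch in sorted(group_by_partition(sample).items()):
        print(index, [e["item"] for e in batch])
```

Because the mapping depends only on the key, both `T-1001` events always share a partition, which is the property a per-partition consumer relies on.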

You are designing an Azure Stream Analytics job to identify potentially fraudulent transactions at a retail store. The job will use retailhub as the input. The job will output the transaction ID, the individual line items, the payment details, a fraud score, and a fraud indicator.

You plan to send the output to an Azure event hub named fraudhub.

You need to ensure that the fraud detection solution is highly scalable and processes transactions as quickly as possible.

How should you structure the output of the Stream Analytics job? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-201 Exam Question 15

You are designing a storage solution for streaming data that is processed by Azure Databricks. The solution must meet the following requirements:

The data schema must be fluid.

The source data must have a high throughput.

The data must be available in multiple Azure regions as quickly as possible.

What should you include in the solution to meet the requirements?
