DP-200 Exam Question 121

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse.

The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Create a remote service binding pointing to the Azure Data Lake Gen 2 storage account.

2. Create an external file format and external table using the external data source.

3. Load the data using the CREATE TABLE AS SELECT statement.

Does the solution meet the goal?

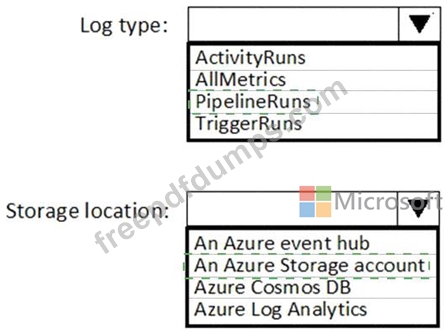
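For reference, the PolyBase load pattern documented for a dedicated SQL pool starts from an external data source (`CREATE EXTERNAL DATA SOURCE`), then adds an external file format, an external table, and a `CREATE TABLE AS SELECT`. The Python sketch below only assembles those T-SQL statements in execution order; it does not connect to a database. The object names (`AdlsSource`, `ParquetFormat`, the `_ext` suffix), the `LoadConfig` fields, and the round-robin distribution are hypothetical choices, and the database scoped credential is assumed to exist already.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoadConfig:
    """Hypothetical inputs for the load; none of these names come from the question."""
    account: str       # ADLS Gen2 storage account name
    container: str     # container (file system) holding the parquet files
    folder: str        # folder inside the container, e.g. "/sales/"
    credential: str    # existing database scoped credential for the account
    columns: str       # external table column list, e.g. "Id INT, Amount DECIMAL(10, 2)"
    target_table: str  # internal table created by CTAS


def build_polybase_load(cfg: LoadConfig) -> List[str]:
    """Return the T-SQL statements of a PolyBase parquet load, in execution order."""
    location = f"abfss://{cfg.container}@{cfg.account}.dfs.core.windows.net"
    external = f"dbo.{cfg.target_table}_ext"
    return [
        # 1. External data source that points at the ADLS Gen2 account.
        "CREATE EXTERNAL DATA SOURCE AdlsSource WITH ("
        f"TYPE = HADOOP, LOCATION = '{location}', CREDENTIAL = {cfg.credential});",
        # 2. External file format that describes the parquet files.
        "CREATE EXTERNAL FILE FORMAT ParquetFormat WITH (FORMAT_TYPE = PARQUET);",
        # 3. External table over the folder, using the source and format above.
        f"CREATE EXTERNAL TABLE {external} ({cfg.columns}) WITH ("
        f"LOCATION = '{cfg.folder}', DATA_SOURCE = AdlsSource, FILE_FORMAT = ParquetFormat);",
        # 4. CTAS copies the external rows into a distributed internal table.
        f"CREATE TABLE dbo.{cfg.target_table} WITH (DISTRIBUTION = ROUND_ROBIN) "
        f"AS SELECT * FROM {external};",
    ]
```

Running the four returned statements in order against the pool would leave the parquet files queryable in place through the external table, while CTAS materializes a distributed internal copy.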

DP-200 Exam Question 122

You have an Azure subscription that contains the following resources:

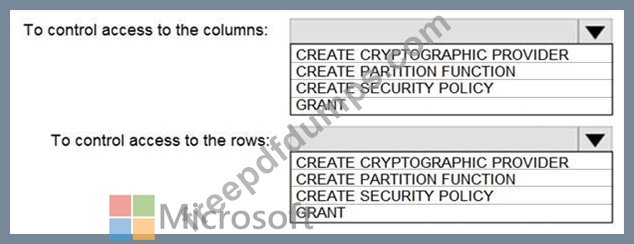

- An Azure Active Directory (Azure AD) tenant that contains a security group named Group1
- An Azure Synapse Analytics SQL pool named Pool1

You need to control the access of Group1 to specific columns and rows in a table in Pool1.

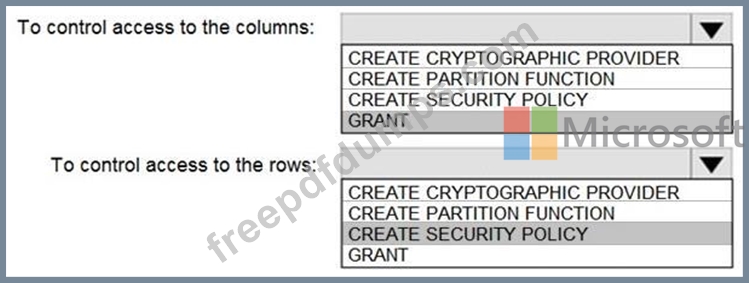

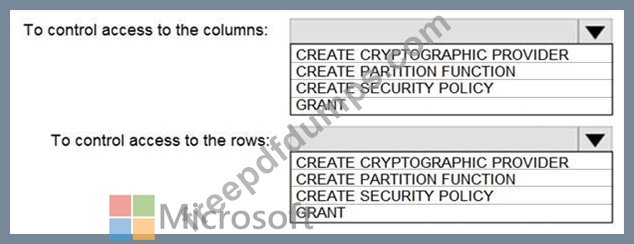

Which Transact-SQL commands should you use? To answer, select the appropriate options in the answer area.
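One common way to cover both requirements in a dedicated SQL pool is to combine column-level security (a `GRANT SELECT` on an explicit column list) with row-level security (a `CREATE SECURITY POLICY` that attaches a filter predicate). The Python sketch below only builds those T-SQL statements as strings. The table, column, policy, and function names are hypothetical, and the inline table-valued predicate function is assumed to have been created separately.

```python
from typing import List, Sequence


def build_access_controls(
    table: str,
    columns: Sequence[str],
    principal: str,
    predicate_fn: str,
    predicate_col: str,
) -> List[str]:
    """Return T-SQL that limits `principal` to some columns and rows of `table`.

    All names are hypothetical; `predicate_fn` is assumed to be an existing
    inline table-valued function created WITH SCHEMABINDING.
    """
    col_list = ", ".join(columns)
    policy_name = table.split(".")[-1] + "Filter"  # e.g. dbo.Sales -> SalesFilter
    return [
        # Map the Azure AD group to a database principal.
        f"CREATE USER [{principal}] FROM EXTERNAL PROVIDER;",
        # Column-level security: SELECT is granted only on the listed columns.
        f"GRANT SELECT ON {table} ({col_list}) TO [{principal}];",
        # Row-level security: the policy applies the predicate as a filter.
        f"CREATE SECURITY POLICY {policy_name} "
        f"ADD FILTER PREDICATE {predicate_fn}({predicate_col}) ON {table} "
        f"WITH (STATE = ON);",
    ]
```

The column restriction and the row restriction are independent objects here: the GRANT controls which columns Group1 can read, while the security policy decides which rows any query against the table returns.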

DP-200 Exam Question 123

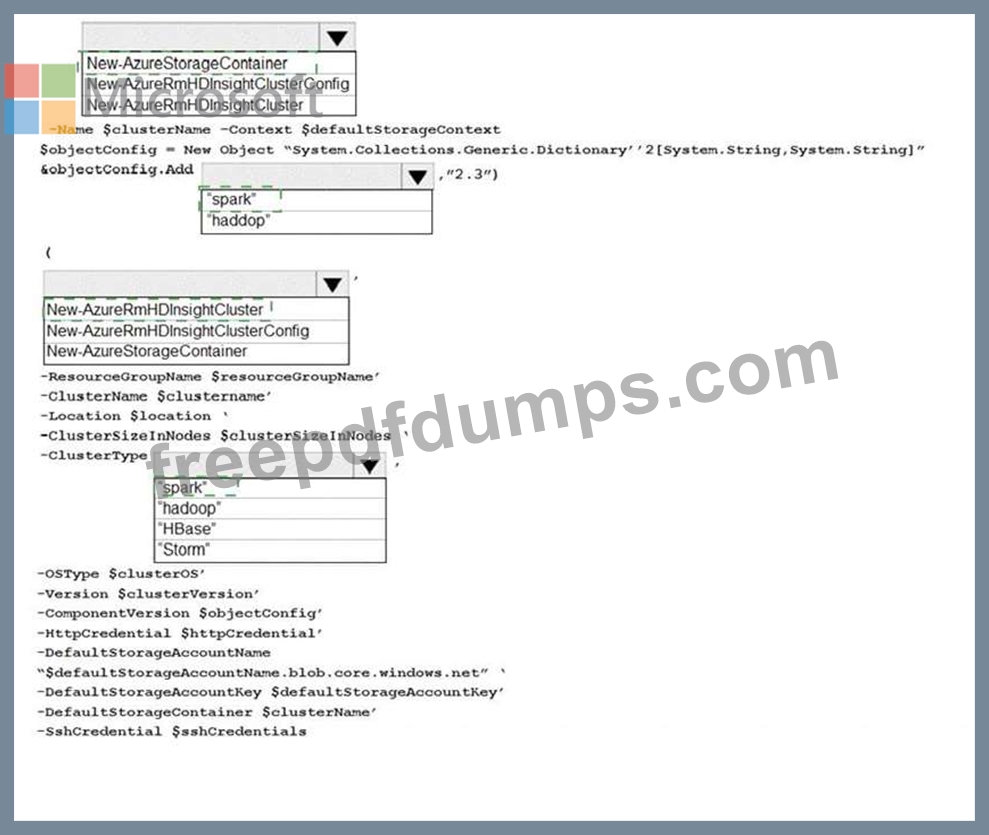

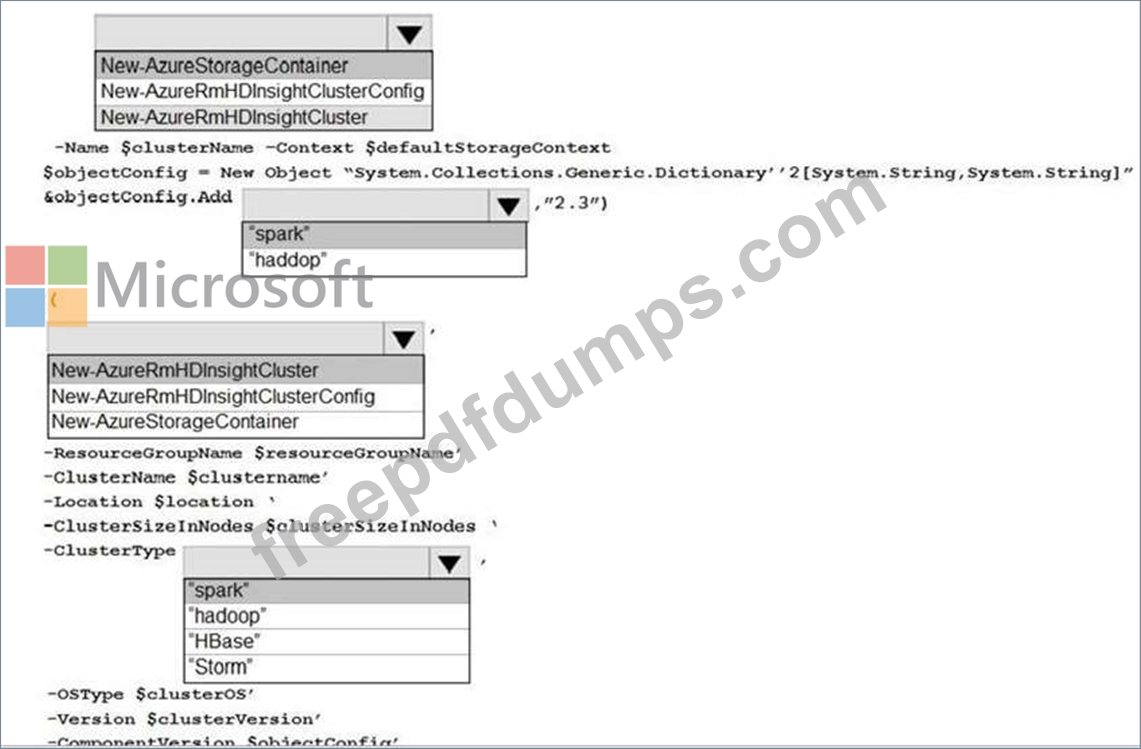

You develop data engineering solutions for a company.

A project requires an in-memory batch data processing solution.

You need to provision an HDInsight cluster for batch processing of data on Microsoft Azure.

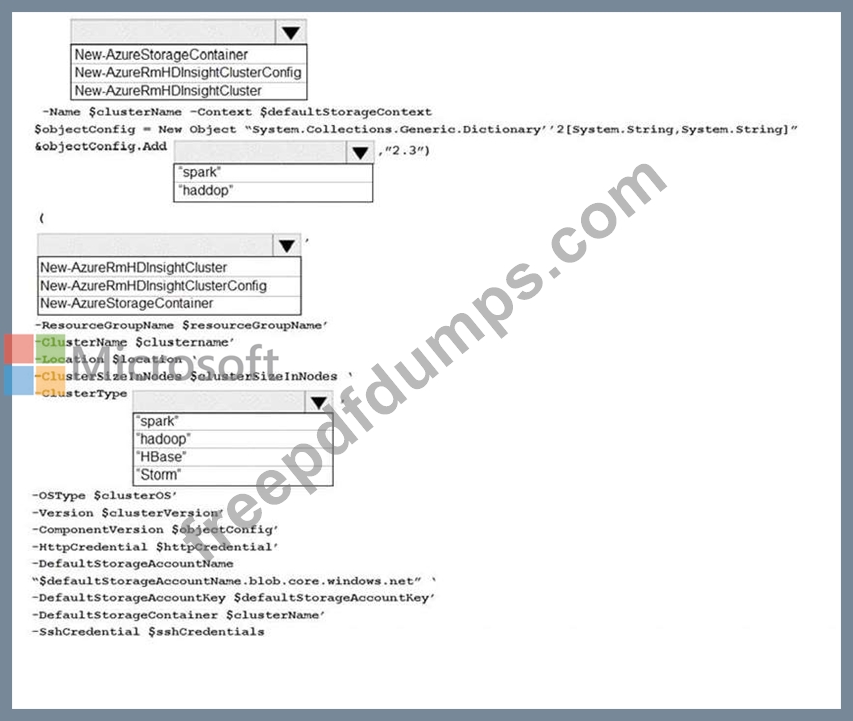

How should you complete the PowerShell segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

DP-200 Exam Question 124

Your company analyzes images from security cameras and sends alerts to security teams that respond to unusual activity. The solution uses Azure Databricks.

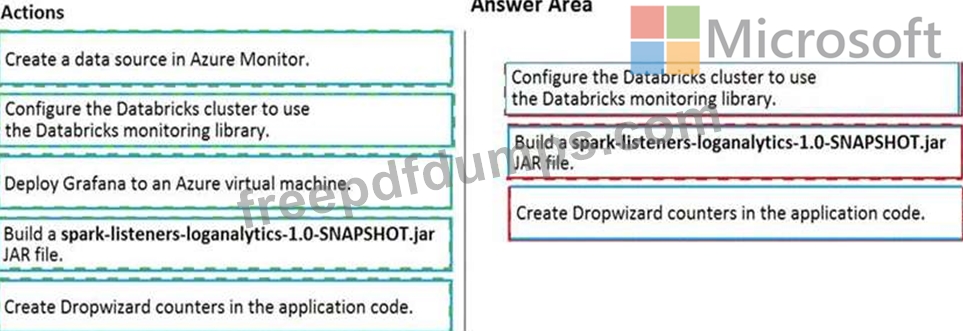

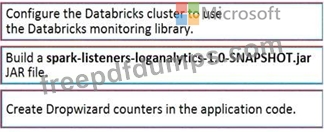

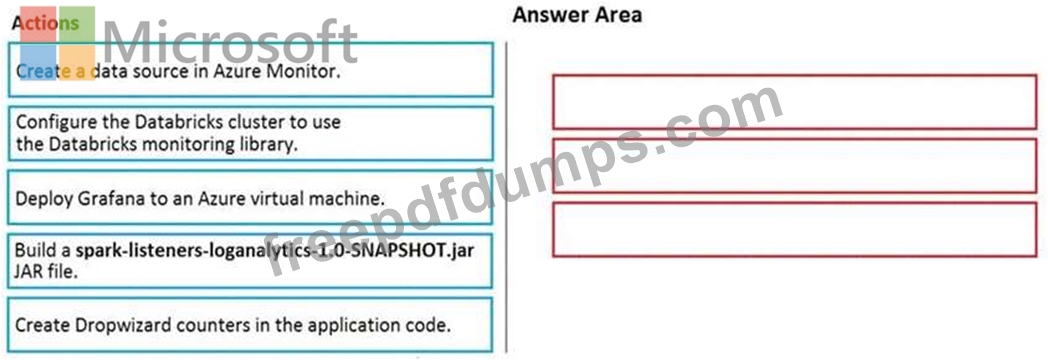

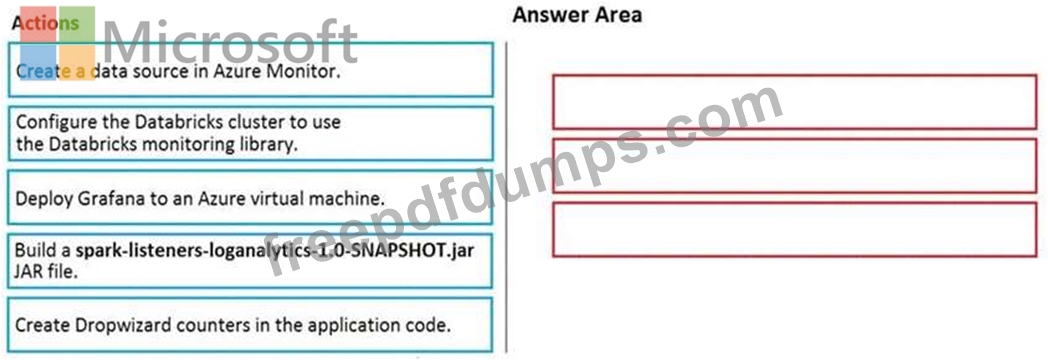

You need to send Apache Spark level events, Spark Structured Streaming metrics, and application metrics to Azure Monitor.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

DP-200 Exam Question 125

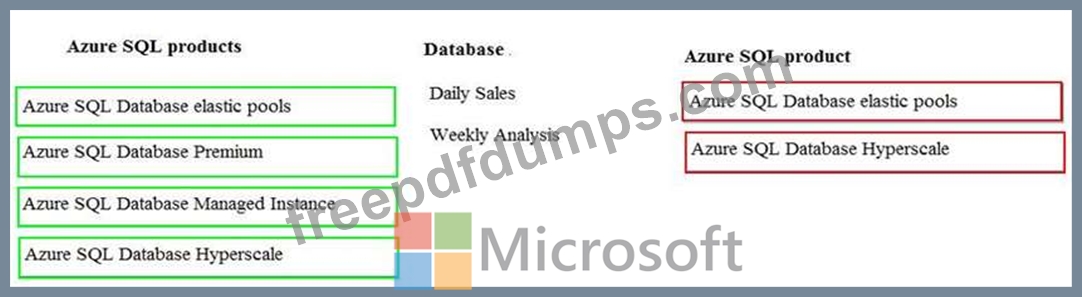

You are developing the data platform for a global retail company. The company operates during normal working hours in each region. The analytical database is used once a week for building sales projections.

Each region maintains its own private virtual network.

Building the sales projections is very resource intensive and generates upwards of 20 terabytes (TB) of data.

Microsoft Azure SQL Databases must be provisioned.

Database provisioning must maximize performance and minimize cost.

The daily sales for each region must be stored in an Azure SQL Database instance.

Once a day, the data for all regions must be loaded in an analytical Azure SQL Database instance.

You need to provision Azure SQL database instances.

How should you provision the database instances? To answer, drag the appropriate Azure SQL products to the correct databases. Each Azure SQL product may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.