DP-200 Exam Question 131

You need to develop a pipeline for processing data. The pipeline must meet the following requirements.

*Scale resources up and down to reduce costs.

*Use an in-memory data processing engine to speed up ETL and machine learning operations.

*Use streaming capabilities.

*Provide the ability to code in SQL, Python, Scala, and R.

*Integrate workspace collaboration with Git.

What should you use?

DP-200 Exam Question 132

A company plans to use Azure Storage for file storage purposes. Compliance rules require:

*A single storage account to store all operations, including reads, writes, and deletes.

*Retention of an on-premises copy of historical operations.

You need to configure the storage account.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

DP-200 Exam Question 133

You implement an enterprise data warehouse in Azure Synapse Analytics.

You have a large fact table that is 10 terabytes (TB) in size.

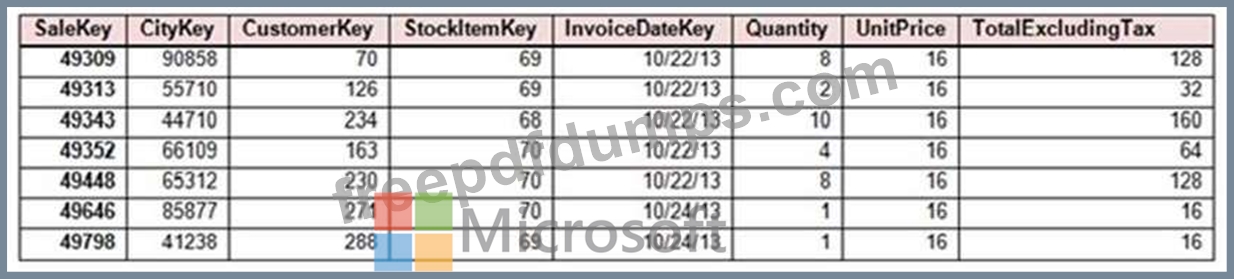

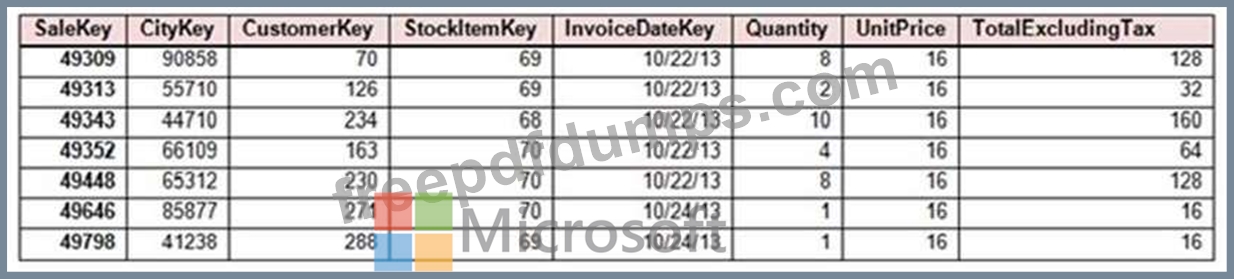

Incoming queries use the primary key Sale Key column to retrieve data as displayed in the following table:

You need to distribute the large fact table across multiple nodes to optimize performance of the table.

Which technology should you use?
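
To illustrate how a large fact table can be spread across nodes by a key such as Sale Key, the following Python sketch mimics hash distribution in a dedicated SQL pool, which always splits a table into 60 distributions. Synapse's real hash function is not public, so MD5 stands in for it here, and the function names are hypothetical. The property that matters is that a given Sale Key value always lands in the same distribution, and a high-cardinality key such as a primary key tends to spread rows evenly.

```python
import hashlib
from collections import Counter

# A dedicated SQL pool in Azure Synapse Analytics splits every table into 60 distributions.
NUM_DISTRIBUTIONS = 60


def distribution_for(sale_key: int) -> int:
    """Map a Sale Key value to a distribution index (0..59).

    Assumption: MD5 is only a stand-in; Synapse's internal hash is not documented.
    """
    digest = hashlib.md5(str(sale_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_DISTRIBUTIONS


def row_counts_per_distribution(sale_keys) -> Counter:
    """Count how many rows would land in each distribution."""
    return Counter(distribution_for(k) for k in sale_keys)


if __name__ == "__main__":
    counts = row_counts_per_distribution(range(1, 600_001))
    print(f"distributions used: {len(counts)}")
    print(f"min rows: {min(counts.values())}, max rows: {max(counts.values())}")
```

With 600,000 sequential keys, all 60 distributions are used and row counts come out roughly equal. In Synapse itself this choice is declared in the table definition, for example with `DISTRIBUTION = HASH([Sale Key])` in the `WITH` clause of `CREATE TABLE`.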

DP-200 Exam Question 134

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You apply an Azure Blob storage lifecycle policy.

Does this meet the goal?
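
An Azure Blob storage lifecycle management policy is a JSON document made of rules. The Python sketch below builds a single rule of the kind the proposed solution describes: delete base blobs more than 100 days after their last modification. The rule name and the restriction to block blobs are assumptions chosen for illustration.

```python
import json

# Hypothetical rule name; it only needs to be unique within the policy.
RULE_NAME = "delete-blobs-not-modified-100-days"


def build_lifecycle_policy(days: int = 100) -> dict:
    """Return a lifecycle policy that deletes base blobs whose last
    modification is more than `days` days old."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": RULE_NAME,
                "type": "Lifecycle",
                "definition": {
                    # Assumption: only block blobs are targeted by this rule.
                    "filters": {"blobTypes": ["blockBlob"]},
                    "actions": {
                        "baseBlob": {
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    # Emit the policy as JSON so it can be pasted or saved to a file.
    print(json.dumps(build_lifecycle_policy(), indent=2))
```

The printed JSON could be pasted into the storage account's Lifecycle management code view, or saved to a file and applied with `az storage account management-policy create --policy @policy.json` together with the account name and resource group. Azure evaluates lifecycle policies roughly once a day.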

DP-200 Exam Question 135

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

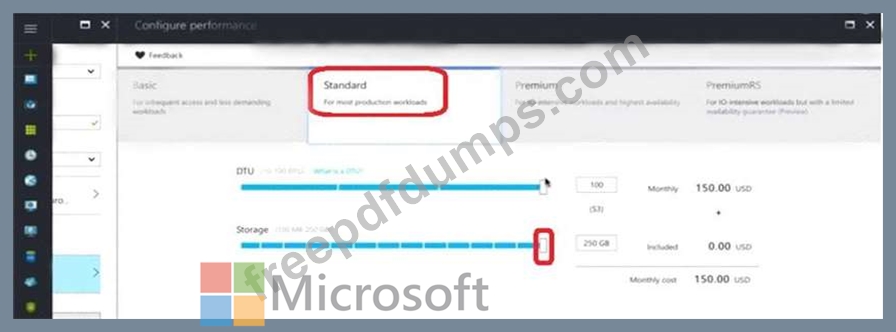

You need to increase the size of db2 to store up to 250 GB of data.

To complete this task, sign in to the Azure portal.