Associate-Developer-Apache-Spark-3.5 Exam Question 21

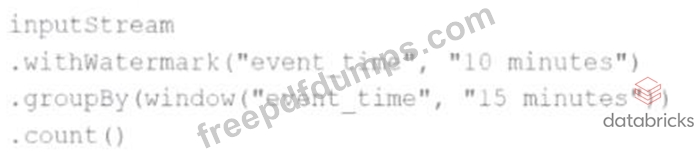

Given this code:

.withWatermark("event_time","10 minutes")

.groupBy(window("event_time","15 minutes"))

.count()

What happens to data that arrives after the watermark threshold?

Options:

Associate-Developer-Apache-Spark-3.5 Exam Question 22

A data engineer is streaming data from Kafka and requires:

- Minimal latency
- Exactly-once processing guarantees

Which trigger mode should be used?

Associate-Developer-Apache-Spark-3.5 Exam Question 23

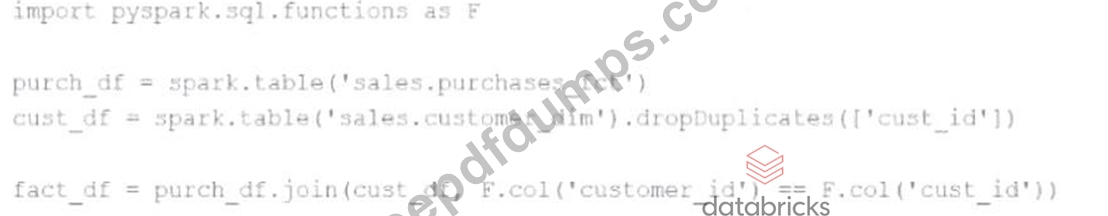

A developer is trying to join two tables, sales.purchases_fct and sales.customer_dim, using the following code:

fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'))

The developer has discovered that customers in the purchases_fct table that do not exist in the customer_dim table are being dropped from the joined table.

Which change should be made to the code to stop these customer records from being dropped?
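To make the row-dropping behavior concrete, here is a minimal plain-Python sketch of inner versus left-outer join semantics. It is not Spark code: the `join` helper, the sample rows, and the `purchase_id` and `name` columns are hypothetical and exist only for illustration. In PySpark itself, `DataFrame.join` takes an optional `how` argument that defaults to an inner join.

```python
# Toy rows standing in for the two tables (hypothetical data, not from the question).
purchases = [
    {"purchase_id": 1, "customer_id": 10},
    {"purchase_id": 2, "customer_id": 11},
    {"purchase_id": 3, "customer_id": 99},  # no matching customer row
]
customers = [
    {"custid": 10, "name": "Ada"},
    {"custid": 11, "name": "Grace"},
]


def join(left, right, left_key, right_key, how="inner"):
    """Join two lists of dicts; `how` is 'inner' or 'left' (illustrative helper)."""
    right_columns = sorted({col for row in right for col in row})
    joined = []
    for l_row in left:
        matches = [r for r in right if r[right_key] == l_row[left_key]]
        if matches:
            joined.extend({**l_row, **r} for r in matches)
        elif how == "left":
            # Keep the unmatched left row and fill right-side columns with None.
            joined.append({**l_row, **{col: None for col in right_columns}})
        # With how="inner", an unmatched left row is silently dropped.
    return joined


inner_rows = join(purchases, customers, "customer_id", "custid", how="inner")
left_rows = join(purchases, customers, "customer_id", "custid", how="left")

print(len(inner_rows), len(left_rows))
```

In this sketch the purchase for customer 99 disappears from `inner_rows` but survives in `left_rows` with `None` in the customer columns, which mirrors how an outer join preserves unmatched rows from the left side.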

Associate-Developer-Apache-Spark-3.5 Exam Question 24

A developer is running Spark SQL queries and notices underutilization of resources. Executors are idle, and the number of tasks per stage is low.

What should the developer do to improve cluster utilization?

Associate-Developer-Apache-Spark-3.5 Exam Question 25

Given the code:

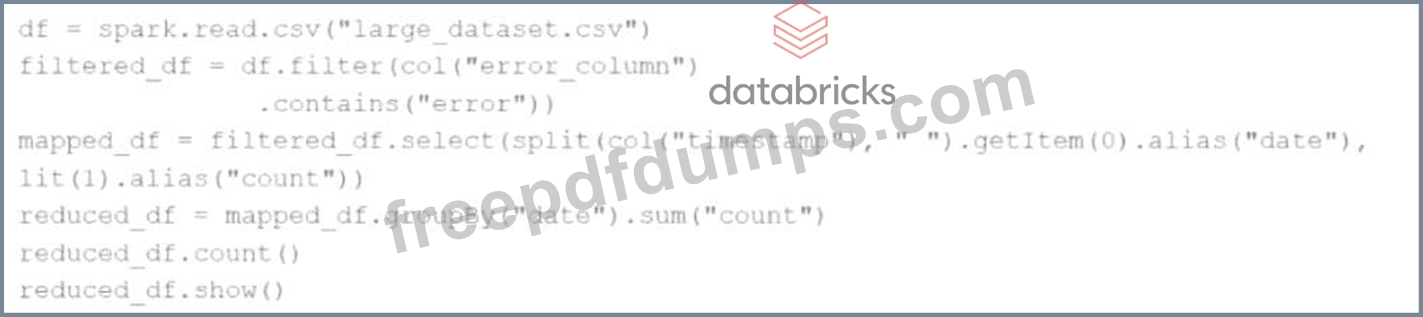

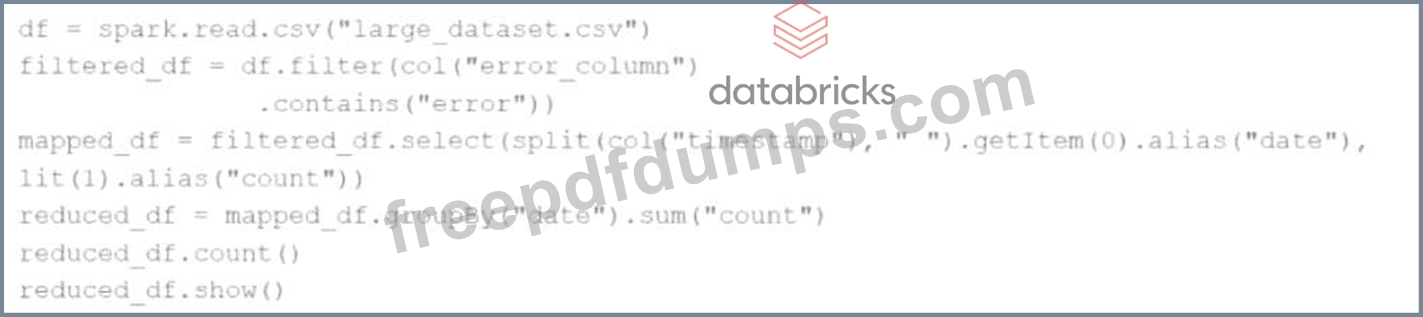

df = spark.read.csv("large_dataset.csv")

filtered_df = df.filter(col("error_column").contains("error"))

mapped_df = filtered_df.select(split(col("timestamp")," ").getItem(0).alias("date"), lit(1).alias("count"))

reduced_df = mapped_df.groupBy("date").sum("count")

reduced_df.count()

reduced_df.show()

At which point will Spark actually begin processing the data?
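The snippet in this question chains several transformations before calling `count()` and `show()`. As a rough illustration of lazy evaluation, the toy model below records transformations as a plan and only runs that plan when an action is called. `LazyFrame`, its methods, and the sample log lines are hypothetical; this is not how Spark is implemented, it omits the `groupBy` step, and it ignores any work a real reader might do when loading a file.

```python
class LazyFrame:
    """Hypothetical stand-in for a DataFrame: transformations only record a plan."""

    runs = 0  # how many times any plan has actually been executed

    def __init__(self, source, plan=()):
        self._source = source
        self._plan = plan

    # Transformations: return a new LazyFrame with a longer plan, touch no data.
    def filter(self, predicate):
        return LazyFrame(self._source, self._plan + (("filter", predicate),))

    def select(self, func):
        return LazyFrame(self._source, self._plan + (("select", func),))

    def _execute(self):
        LazyFrame.runs += 1
        rows = list(self._source)
        for kind, fn in self._plan:
            if kind == "filter":
                rows = [r for r in rows if fn(r)]
            else:
                rows = [fn(r) for r in rows]
        return rows

    # Actions: these are the only methods that run the recorded plan.
    def count(self):
        return len(self._execute())

    def show(self):
        for row in self._execute():
            print(row)


log_lines = [
    "2024-01-01 10:00 error disk",
    "2024-01-01 10:05 ok",
    "2024-01-02 09:00 error net",
]
df = LazyFrame(log_lines)
filtered_df = df.filter(lambda line: "error" in line)
mapped_df = filtered_df.select(lambda line: line.split(" ")[0])
runs_before_action = LazyFrame.runs

n = mapped_df.count()  # first action: the plan runs here
runs_after_count = LazyFrame.runs

mapped_df.show()  # second action: the plan runs again
runs_after_show = LazyFrame.runs
```

In this model nothing executes while `filter` and `select` are being chained, and each action re-runs the whole plan because no intermediate result is cached.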
